Distributed GraphQL with Hasura and Supabase

Your apps will run faster if the APIs they call are physically located close to your end users. This tutorial will use CloudFlow to deploy the open-source Hasura container to multiple datacenters. We will configure it to use Supabase as the Postgres database backend.

The Hasura container we will use is available on DockerHub.

Note: Before starting, create a new CloudFlow Project, then delete the default Deployment and ingress-upstream Service to prepare the project for your new deployment.

Prerequisites

- You need an account on Supabase.

Create a Database on Supabase

Create a new Postgres instance for Hasura to connect to. This tutorial uses Supabase because it provides free, managed Postgres instances, but any Postgres database will work.

- Visit https://app.supabase.com/ and click "New project".

- Select a name, password, and region for your database. Make sure to save the password, as you will need it later.

- Click "Create new project". Creating the project can take a while, so be patient.

- Once the project is created, navigate to the "Database" tab on the left.

You have just created an empty database on Supabase. Hasura will populate it with metadata upon first connection.

Get Your Connection String

Your connection string will have the form postgresql://postgres:[YOUR-PASSWORD]@[YOUR-SUPABASE-ENDPOINT]:5432/postgres. For example, with mock credentials: postgresql://postgres:abc1234@db.abcxyzabcxyzabcxyzabcxyz.supabase.co:5432/postgres.

- Go to Settings, and then Database.

- Scroll down to the "Connection string" section and copy the connection string from the "URI" tab. (Do not use the connection string under "Connection pooling", because Hasura does its own connection pooling.)

- Insert the password you saved earlier into the string at [YOUR-PASSWORD].
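A password that contains characters such as @, / or : has to be percent-encoded before it goes into the URI, or the string will not parse as intended. Below is a minimal Python sketch of assembling the string, assuming the default user, port, and database shown in the form above. The helper name build_connection_string is hypothetical and is not part of Supabase or Hasura.

```python
from urllib.parse import quote


def build_connection_string(password, host, user="postgres", port=5432, database="postgres"):
    """Assemble a Postgres URI, percent-encoding the password.

    The defaults mirror the form shown above; adjust them if your
    database uses different values (assumption: Supabase defaults).
    """
    # safe="" forces every reserved character (including "/") to be encoded.
    encoded_password = quote(password, safe="")
    return f"postgresql://{user}:{encoded_password}@{host}:{port}/{database}"


# Mock credentials only, matching the example above.
print(build_connection_string("abc1234", "db.abcxyzabcxyzabcxyzabcxyz.supabase.co"))
```

A password made only of letters and digits passes through unchanged, so the mock example above comes out the same as the hand-written string.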

Create a Deployment for Hasura

Next, create the deployment for Hasura as hasura-deployment.yaml. This directs CloudFlow to run the open-source Hasura container. Replace YOUR_CONNECTION_STRING with the connection string from the previous step, and replace YOUR_ADMIN_PASSWORD with a password of your choice for the Hasura console.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: hasura
      name: hasura
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: hasura
      template:
        metadata:
          labels:
            app: hasura
        spec:
          containers:
            - image: hasura/graphql-engine
              imagePullPolicy: Always
              name: hasura
              resources:
                requests:
                  memory: ".5Gi"
                  cpu: "500m"
                limits:
                  memory: ".5Gi"
                  cpu: "500m"
              env:
                - name: HASURA_GRAPHQL_DATABASE_URL
                  value: YOUR_CONNECTION_STRING
                - name: HASURA_GRAPHQL_ENABLE_CONSOLE
                  value: "true"
                - name: HASURA_GRAPHQL_ADMIN_SECRET
                  value: YOUR_ADMIN_PASSWORD

Apply this deployment resource to your Project with either the Kubernetes dashboard or kubectl apply -f hasura-deployment.yaml.
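The placeholder substitution described above can be scripted rather than done by hand, which keeps real credentials out of the saved manifest. The sketch below is one possible approach, not a CloudFlow or Hasura feature. The function name render_manifest is hypothetical. Values are emitted as JSON strings, which are also valid double-quoted YAML scalars, so special characters in a password do not break the file.

```python
import json


def render_manifest(template_text, connection_string, admin_password):
    """Return the manifest text with both placeholders filled in.

    Each value is quoted with json.dumps so that characters such as
    '#' or a leading '*' are not misread by the YAML parser.
    """
    replacements = {
        "YOUR_CONNECTION_STRING": json.dumps(connection_string),
        "YOUR_ADMIN_PASSWORD": json.dumps(admin_password),
    }
    for placeholder, value in replacements.items():
        template_text = template_text.replace(placeholder, value)
    return template_text


# Tiny stand-in for the env section of hasura-deployment.yaml (mock values).
sample = "value: YOUR_CONNECTION_STRING\nvalue: YOUR_ADMIN_PASSWORD\n"
print(render_manifest(sample, "postgresql://postgres:abc1234@host:5432/postgres", "console-pass"))
```

In practice you would read hasura-deployment.yaml from disk, take the two values from environment variables or a prompt, and pass the rendered text to kubectl apply -f - on standard input.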

Expose the Hasura Console on the Internet

To expose the Hasura console on the Internet, create ingress-upstream.yaml as defined below. The Service forwards port 80 to port 8080, where the Hasura container listens.

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: ingress-upstream
      name: ingress-upstream
    spec:
      ports:
        - name: 80-80
          port: 80
          protocol: TCP
          targetPort: 8080
      selector:
        app: hasura
      sessionAffinity: None
      type: ClusterIP

Apply this service resource to your Project with either the Kubernetes dashboard or kubectl apply -f ingress-upstream.yaml.

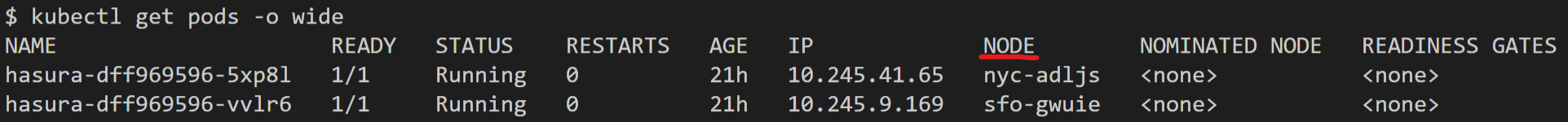

To see the pods running on CloudFlow's network, run kubectl get pods -o wide.

The -o wide switch shows where your GraphQL API is running. With the default AEE location optimization strategy, CloudFlow places your GraphQL API according to traffic.

Finally, follow the instructions that configure DNS and TLS.
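Once DNS and TLS are in place, a quick way to confirm the deployment is reachable is Hasura's /healthz endpoint, which answers with HTTP 200 when the engine is up. The following is a minimal sketch using only the Python standard library. The function names and the opener parameter (there only to make the check testable offline) are hypothetical. Pass the hostname you configured as the base URL.

```python
import urllib.error
import urllib.request


def health_url(base_url):
    """Build the health-check URL for a Hasura instance."""
    return base_url.rstrip("/") + "/healthz"


def is_healthy(base_url, opener=urllib.request.urlopen, timeout=5):
    """Return True if the health endpoint answers with HTTP 200.

    Network failures and HTTP error statuses are both reported as False.
    """
    try:
        with opener(health_url(base_url), timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        return False
```

Calling is_healthy with your own hostname, for example is_healthy("https://YOUR_HOSTNAME"), returns True or False without raising, so it can be used in a simple retry loop while DNS propagates.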

Experiment with Hasura

You can now start using Hasura. Although the main purpose of the Postgres database in this tutorial is to store Hasura metadata, you can also use the Data section of the Hasura console to create tables of your own in the same Postgres database, and then query that data through Hasura's GraphQL API.
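Beyond the console, the same data can be queried programmatically. Hasura serves GraphQL at the /v1/graphql path and accepts the admin secret in the x-hasura-admin-secret header. The sketch below shows one way to send a query with only the Python standard library. The function names are hypothetical, and the base URL and secret are placeholders for the values you chose earlier.

```python
import json
import urllib.request


def build_graphql_request(base_url, admin_secret, query, variables=None):
    """Create a POST request for Hasura's GraphQL endpoint."""
    payload = json.dumps({"query": query, "variables": variables or {}}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/graphql",
        data=payload,
        headers={
            "Content-Type": "application/json",
            "x-hasura-admin-secret": admin_secret,
        },
        method="POST",
    )


def run_query(base_url, admin_secret, query, variables=None, timeout=10):
    """Send the query and return the decoded JSON response body."""
    request = build_graphql_request(base_url, admin_secret, query, variables)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

A call such as run_query("https://YOUR_HOSTNAME", "YOUR_ADMIN_PASSWORD", "{ __typename }") is a harmless first test, because that query is valid against any GraphQL schema. The admin secret grants full access, so for client-facing apps it is better kept on the server side.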