TL;DR

In tests, Section delivered 7.04x lower latency than a major cloud provider at the 95th percentile, at the same level of spend.

Today, we are excited to announce the results of a recent series of tests to determine the performance benefits of Section’s Adaptive Edge Engine (AEE).

The AEE (patent pending) is a dynamic, context-aware workload scheduling and traffic routing technology. Our ML forecasting engine dynamically provisions edge resources and routes traffic in response to real-time demands. This allows developers to take advantage of bespoke, dynamic edge networks, optimized across a range of attributes, including performance, cost, security/regulatory requirements and carbon-neutral underlying infrastructure.

For more background on the strategic thinking behind the AEE, check out this article: Creating the Mutual Funds of Edge Computing.

AEE vs. the Cloud

We recently ran a set of experiments comparing an application running on Section under the control of the AEE against the same application running in a major cloud provider. At this stage we have not sought to define the scalability or capacity limits of the system (that will come later!), but rather to demonstrate a set of basic actions and outcomes under controlled conditions.

Test Design

Our test design involved the following parameters for the test agents:

- We built three geographically distributed servers to generate HTTPS traffic, located in US East, Asia East and Europe West.

- Each test agent executed the same traffic pattern, starting at 9am local time in its own region.

- The load pattern represents a standard 24 hour period of traffic: busy in the day and quiet at night.

- We developed the tests in JMeter.
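The post does not publish the exact load profile, so the following is only a hypothetical Python sketch (the real tests were written in JMeter) of how a 24-hour day/night pattern started at 9am local time in each region ends up staggered in UTC. The cosine shape, the `PEAK_RPS`/`TROUGH_RPS` values and the fixed `REGION_UTC_OFFSETS` (which ignore daylight saving) are all assumptions for illustration.

```python
import math
from datetime import datetime, timedelta, timezone

# Hypothetical rates: the post does not state the actual request rates used.
PEAK_RPS = 50.0
TROUGH_RPS = 5.0

# Assumed fixed UTC offsets (hours) for each agent's region; the real time
# zones and daylight-saving rules of the test agents are not given.
REGION_UTC_OFFSETS = {"US East": -5, "Asia East": 8, "Europe West": 0}


def diurnal_rate(hours_since_start: float) -> float:
    """Requests/second for an agent whose run starts at 9am local time.

    A cosine curve with a 24-hour period that peaks 6 hours in (3pm local)
    and bottoms out 18 hours in (3am local): busy by day, quiet at night.
    """
    midpoint = (PEAK_RPS + TROUGH_RPS) / 2
    amplitude = (PEAK_RPS - TROUGH_RPS) / 2
    phase = 2 * math.pi * (hours_since_start - 6.0) / 24.0
    return midpoint + amplitude * math.cos(phase)


def utc_start(region: str, day: datetime) -> datetime:
    """UTC instant at which 9am local time occurs in `region` on `day`."""
    local_tz = timezone(timedelta(hours=REGION_UTC_OFFSETS[region]))
    local_nine = datetime(day.year, day.month, day.day, 9, tzinfo=local_tz)
    return local_nine.astimezone(timezone.utc)


if __name__ == "__main__":
    day = datetime(2021, 1, 4)
    for region in REGION_UTC_OFFSETS:
        print(region, "starts at", utc_start(region, day).strftime("%H:%M UTC"))
    # → US East starts at 14:00 UTC
    # → Asia East starts at 01:00 UTC
    # → Europe West starts at 09:00 UTC
```

Because each agent is anchored to its own local morning, the regional peaks arrive at different UTC times, which is the kind of shifting demand a location-aware scheduler has to follow.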

For the test targets, we created a Node.js application that responds to the JMeter agents with an HTTP 200 OK status code. The application was deployed unchanged to both Section and the major cloud provider; no modifications were needed to get it working on either platform.

The Node.js application deployed in Section was configured to use one of the AEE’s pluggable optimization strategies, in this case the Minimum Request Location Optimizer Strategy. Under this strategy, the application was deployed to a Point of Presence (PoP) whenever the traffic from the geographic source closest to that PoP exceeded 25 requests per second. In the major cloud provider, the application was deployed statically, as in conventional single-datacenter hosting.
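The AEE's implementation is not public, so the following is only a minimal Python sketch of one reading of this strategy: each traffic source is attributed to its nearest PoP by great-circle (haversine) distance, and a PoP is selected when the traffic attributed to it exceeds 25 requests per second. The `Location` type, the `select_pops` function and all example coordinates and rates are hypothetical.

```python
import math
from dataclasses import dataclass

THRESHOLD_RPS = 25.0  # the threshold stated for the test
EARTH_RADIUS_KM = 6371.0


@dataclass(frozen=True)
class Location:
    """Hypothetical type for a PoP or a traffic source."""
    name: str
    lat: float  # degrees
    lon: float  # degrees


def haversine_km(a: Location, b: Location) -> float:
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(a.lat), math.radians(b.lat)
    dphi = phi2 - phi1
    dlam = math.radians(b.lon - a.lon)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def select_pops(pops, traffic, threshold=THRESHOLD_RPS):
    """Return names of PoPs whose nearest-source traffic exceeds `threshold`.

    `traffic` maps each source Location to its observed requests/second.
    """
    load = {pop.name: 0.0 for pop in pops}
    for source, rps in traffic.items():
        # Attribute this source's traffic to the closest PoP.
        nearest = min(pops, key=lambda pop: haversine_km(source, pop))
        load[nearest.name] += rps
    return sorted(name for name, rps in load.items() if rps > threshold)


# Hypothetical PoPs and per-agent request rates, for illustration only.
POPS = [
    Location("nyc", 40.71, -74.01),
    Location("sin", 1.35, 103.82),
    Location("lon", 51.51, -0.13),
]
TRAFFIC = {
    Location("us-east-agent", 39.04, -77.49): 40.0,
    Location("asia-east-agent", 22.32, 114.17): 10.0,
    Location("europe-west-agent", 50.11, 8.68): 30.0,
}

if __name__ == "__main__":
    print(select_pops(POPS, TRAFFIC))  # → ['lon', 'nyc']
```

With these made-up rates, Asia East traffic stays below the threshold, so only the New York and London PoPs would be selected. The sketch simply returns an empty list if no PoP clears the threshold; how the AEE handles that case is not described in this post.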

Test Results

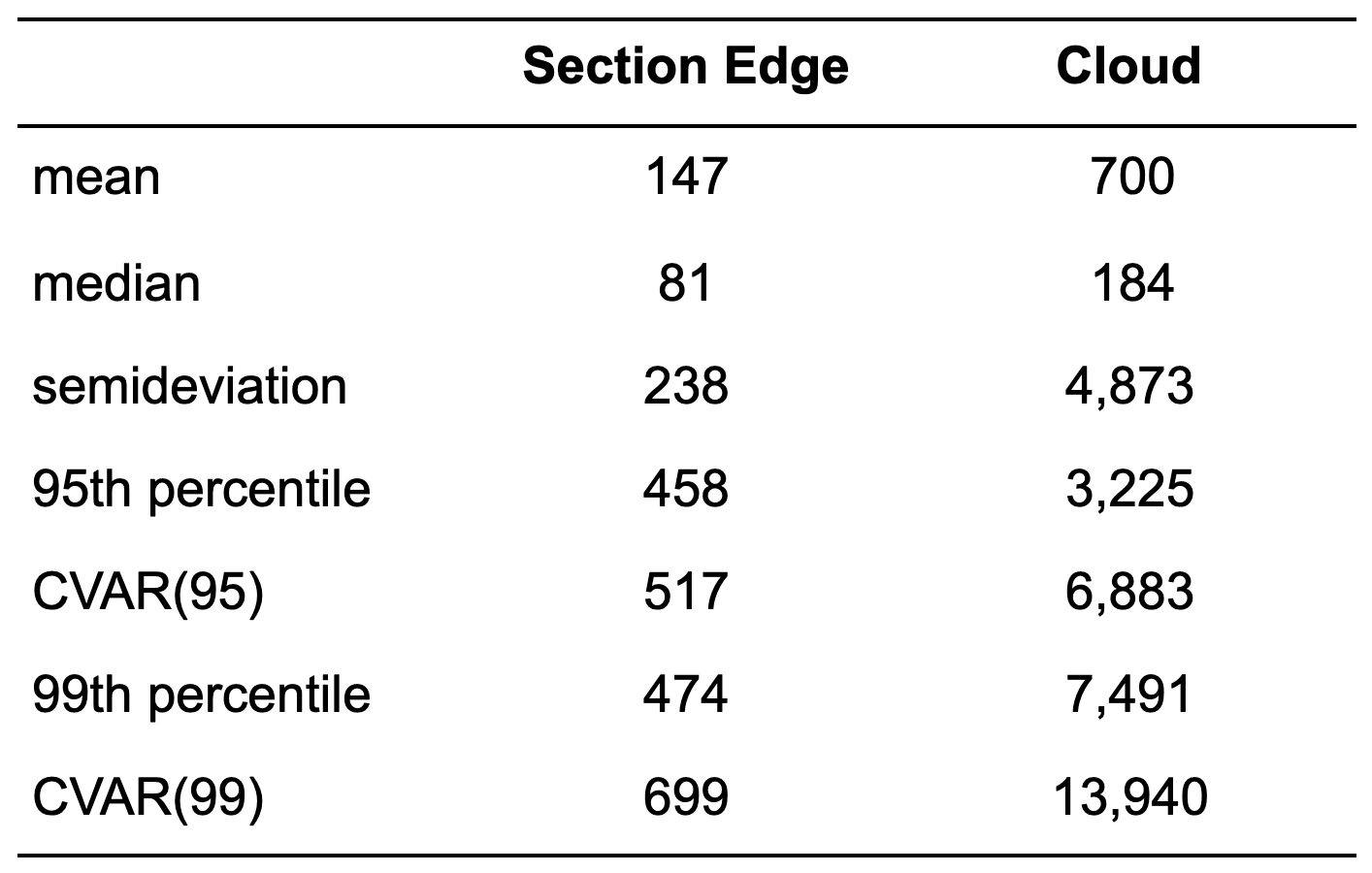

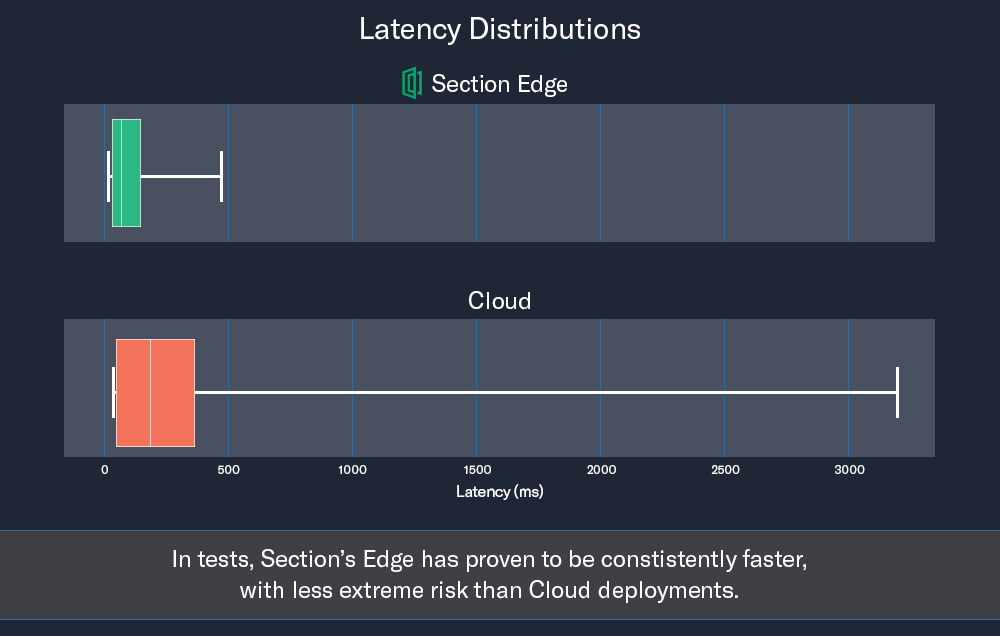

The test patterns generated approximately 5.1 million responses per case. Across these observations, latency in the Section Edge case had a mean of 147 milliseconds (ms) and a median of 81 ms; latency in the Cloud case had a mean of 700 ms and a median of 184 ms.

Both latency distributions are right-skewed, as indicated by means that exceed their medians. To characterize the spread of the right tail of each distribution, we computed the semideviation, an analogue of the standard deviation that considers only the values above (or below) the mean. The “upper” semideviation used here summarizes the risk of slower-than-average performance. The upper semideviation was 238 ms for Section Edge latency and 4,873 ms for Cloud latency.
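The post does not state which normalization it used for the semideviation. The minimal Python sketch below assumes the common convention of dividing the sum of squared deviations on one side of the mean by the total number of observations n; some definitions divide by the count of observations on that side instead. The function name is hypothetical.

```python
import math
from statistics import fmean


def semideviation(samples, upper=True):
    """Upper (or lower) semideviation of a sample.

    Assumed definition: sqrt((1/n) * sum((x - mean)**2 for x on one side of
    the mean)), where n counts *all* observations.
    """
    mu = fmean(samples)
    if upper:
        tail = (x - mu for x in samples if x > mu)
    else:
        tail = (mu - x for x in samples if x < mu)
    return math.sqrt(sum(d * d for d in tail) / len(samples))


if __name__ == "__main__":
    # A toy right-skewed sample (ms): most requests fast, one slow outlier.
    latencies = [100, 100, 100, 500]
    print(round(semideviation(latencies), 1))               # → 150.0
    print(round(semideviation(latencies, upper=False), 1))  # → 86.6
```

In this toy sample the upper value is well above the lower one, reflecting the right skew; a single symmetric standard deviation would blur that asymmetry between fast and slow requests.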

At the extremes, the 95th and 99th percentiles were 458 ms and 474 ms for Section Edge, and 3,225 ms and 7,491 ms for Cloud. A statistic that characterizes risk in the extreme tail of a distribution is the conditional value at risk (CVAR): the average of the values that exceed a specified percentile of the distribution, i.e. the average outcome in the tail. The CVAR(95), the mean latency above the 95th percentile, was 517 ms for Section Edge and 6,883 ms for Cloud. In other words, end users experiencing latency in the upper 5% of the distribution saw an average latency of 517 ms in the Section Edge case and 6,883 ms in the Cloud case. The CVAR(99) values were 699 ms for Section Edge and 13,940 ms for Cloud.
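A minimal Python sketch of these two tail statistics follows, assuming the nearest-rank percentile definition and a CVAR that averages the values strictly above that percentile. The post does not say which percentile method was used, and interpolating methods (such as the defaults of `statistics.quantiles` or `numpy.percentile`) can give slightly different numbers. The function names are hypothetical.

```python
import math
from statistics import fmean


def percentile(samples, p):
    """Nearest-rank p-th percentile (0 < p <= 100)."""
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def cvar(samples, p):
    """Conditional value at risk: mean of the values above the p-th percentile.

    Falls back to the percentile itself if no value lies strictly above it
    (e.g. when the top of the sample is all ties).
    """
    cutoff = percentile(samples, p)
    tail = [x for x in samples if x > cutoff]
    return fmean(tail) if tail else float(cutoff)


if __name__ == "__main__":
    latencies = list(range(1, 101))  # toy data: 1..100 ms
    print(percentile(latencies, 95), cvar(latencies, 95))  # → 95 98.0
    print(percentile(latencies, 99), cvar(latencies, 99))  # → 99 100.0
```

Because the CVAR averages the whole tail rather than reading off a single cut point, a handful of very slow responses can push it far above the corresponding percentile, as the Cloud CVAR(99) of 13,940 ms against a 99th percentile of 7,491 ms illustrates.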

Analysis of the Test Results

The results indicate that requests to an app deployed on Section Edge are consistently served faster, and with markedly lower extreme-tail risk, than requests to the exact same application in a Cloud deployment.

The Section Edge latency distribution has a lower mean and median than the Cloud distribution, and its semideviation (a measure of spread above the mean) is more than an order of magnitude smaller than that of the Cloud case (238 ms versus 4,873 ms).

The 95th and 99th percentiles of the Cloud distribution are roughly 7 and 16 times greater, respectively, than those for Section Edge. The mean latencies in the extreme tails (the CVAR values) are an order of magnitude lower for the Section Edge case than for the Cloud case. Because the CVAR is an arithmetic average, it is sensitive to the spread of values in the upper tail region under consideration. The very high CVAR(99) for the Cloud case shows that the upper 1% of latency values are very large: the Cloud CVAR(99) is nearly 20 times the mean of that distribution (recall that only observations with a 200 response code were analyzed). By contrast, the CVAR values of the Section Edge latency distribution are less than 5 times its mean. In fact, the average latency for the slowest 1% of Section Edge users (i.e. CVAR(99), 699 ms) is roughly equal to the average latency of all users in the Cloud case (700 ms).
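The ratios discussed in this analysis can be checked directly against the summary statistics reported above. The short Python sketch below does only that arithmetic; the dictionary layout and helper names are illustrative.

```python
# Summary statistics reported in this post, in milliseconds.
REPORTED_MS = {
    "Section Edge": {"mean": 147, "median": 81, "semidev": 238,
                     "p95": 458, "p99": 474, "cvar95": 517, "cvar99": 699},
    "Cloud": {"mean": 700, "median": 184, "semidev": 4873,
              "p95": 3225, "p99": 7491, "cvar95": 6883, "cvar99": 13940},
}


def cloud_to_edge(stat: str) -> float:
    """How many times larger the Cloud value is than the Section Edge value."""
    return REPORTED_MS["Cloud"][stat] / REPORTED_MS["Section Edge"][stat]


def tail_to_mean(case: str, stat: str) -> float:
    """A tail statistic expressed as a multiple of that case's mean."""
    return REPORTED_MS[case][stat] / REPORTED_MS[case]["mean"]


if __name__ == "__main__":
    for stat in ("p95", "p99", "cvar95", "cvar99", "semidev"):
        print(f"{stat}: Cloud / Section Edge = {cloud_to_edge(stat):.2f}")
    # prints ratios of 7.04, 15.80, 13.31, 19.94 and 20.47
    for case in REPORTED_MS:
        print(f"{case}: CVAR(99) / mean = {tail_to_mean(case, 'cvar99'):.2f}")
    # prints 4.76 for Section Edge and 19.91 for Cloud
```

For example, the 95th-percentile ratio works out to the 7.04x figure in the TL;DR, and the Section Edge CVAR(99) comes to about 4.8 times its mean.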

The value proposition of edge compute

Successful use of edge compute will require automated systems that dynamically migrate workloads into, out of, and across a rapidly growing pool of edge Points of Presence (PoPs). Despite its performance benefits, edge computing will simply be too expensive for many workloads without this kind of automated workload orchestration.

Workload Orchestration

An intelligent system which dynamically determines the optimal location, time and priority for application workloads to be processed on the range of compute, data storage and network resources from the centralized and regional data centers to the resources available at both the infrastructure edge and device edge. Workloads may be tagged with specific performance and cost requirements, which determines where they are to be operated as resources that meet them are available for use. - Open Glossary of Edge Computing v2

Sustainability matters

The benefits of Section’s Adaptive Edge Engine are not limited to latency improvements and cost efficiencies; it also enables us to contribute to wider tech-sector efforts to build a more sustainable future. With projections of 6 billion Internet users by 2022 (75% of the world’s population), efforts to reduce tech-related energy consumption are crucial. The AEE continuously tunes the compute resources requested at the edge in response to performance, traffic served and the application’s required compute attributes, so we use the minimum amount of compute necessary to deliver maximum availability and performance.

For a deeper dive on how Section’s AEE drives more sustainable computing models, check out this recent talk from Dr. Kurt Rinehart, Section’s Director of Information Engineering: Comparing Cloud vs Edge efficiencies using context-aware, location-optimized workload scheduling.

Keep an eye out for our next post in this series, digging into the inner workings of the AEE.